What is Developer Tools or DevTools?

“Developer Tools,” often referred to as “DevTools,” is a set of web development tools built into modern browsers such as Chrome, Edge, and Firefox. DevTools lets you edit pages on the fly and diagnose problems quickly, which helps you build better websites faster.

In this tutorial, we will explore how web scrapers can utilize DevTools for web scraping purposes.

You can use DevTools in any browser, as the core functionality remains the same across most browsers. But for this tutorial, we’ll focus on Chrome. Once you’re familiar with it in Chrome, you’ll find it easy to use in other browsers too.

How to open DevTools?

There are different ways to open DevTools in Chrome.

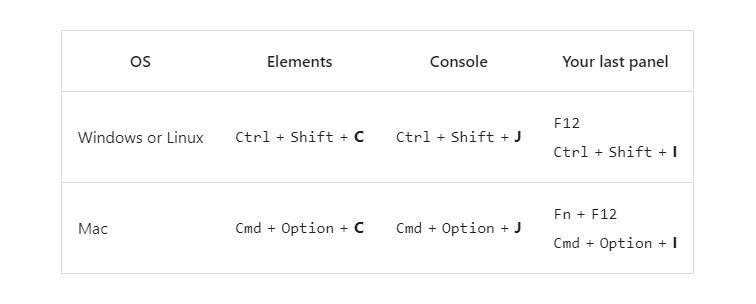

By Shortcuts

If you prefer the keyboard, press the shortcut for your operating system: Ctrl + Shift + I (or F12) on Windows and Linux, or Cmd + Option + I on Mac.

By UI

To access DevTools, you can simply right-click anywhere within the browser window and select “Inspect” from the menu that appears.

Understanding the Components of DevTools

DevTools has many tabs and options, but we’ll focus on only the three we need for web scraping:

- Elements

- Console

- Network

1. Elements Tab

The Elements tab in DevTools is a tool that allows you to view and edit the HTML elements of a web page. It is one of the most important tools for web scrapers, as it allows you to inspect the structure of a web page, check the properties of elements, and make changes to the code.

Here is what you need to know about the Elements tab for web scraping.

To inspect different elements on a web page

When you are scraping a website, you first need to find the element you want in the HTML tree and inspect it.

For example:

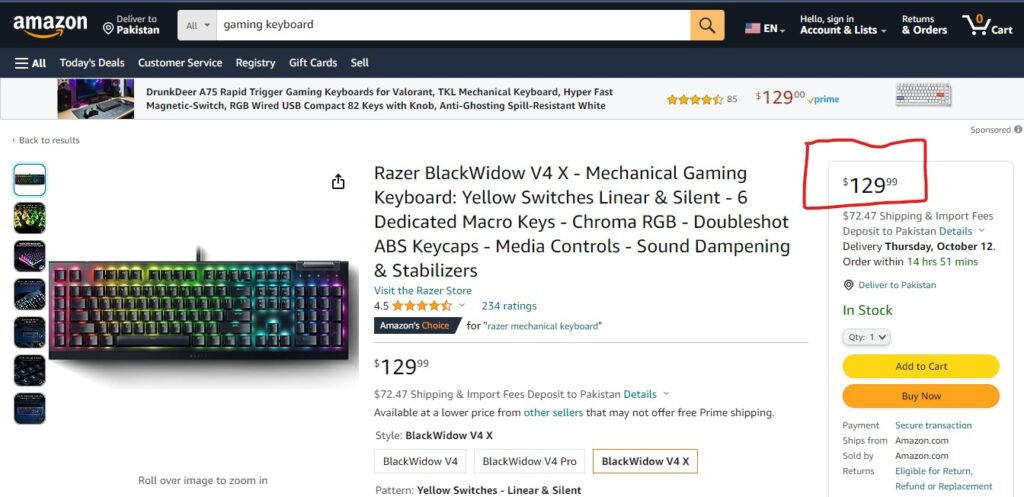

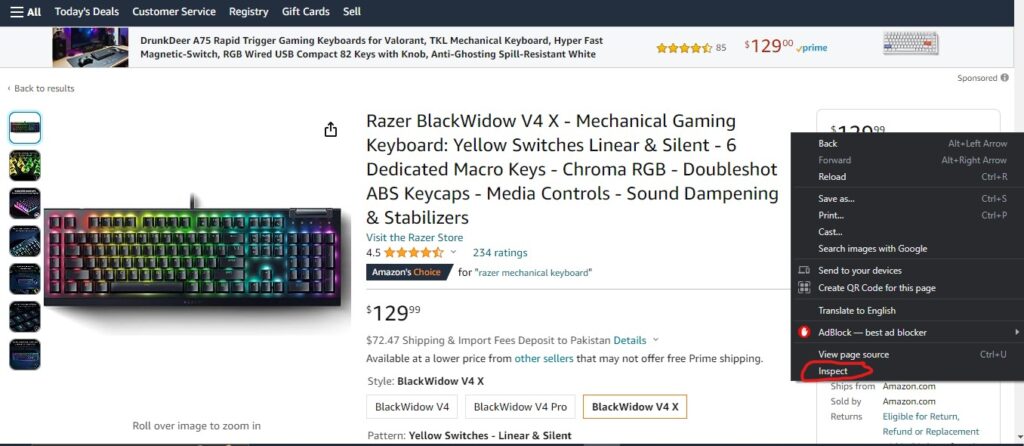

Consider this Amazon page. Suppose you want to scrape the price of the keyboard. You first need to inspect that element to see its HTML code.

To inspect the element, right-click on the price, and then select Inspect from the options.

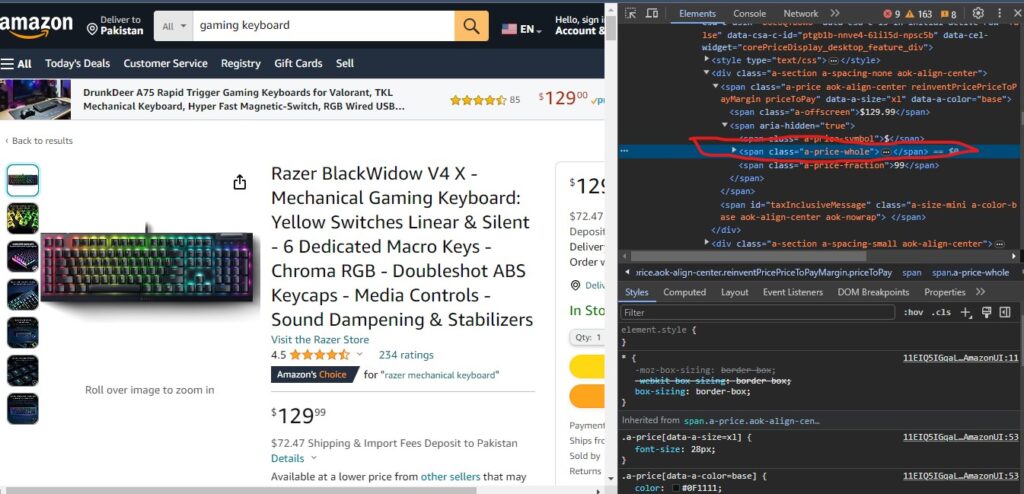

The browser will then take you to that element in the HTML document.

As you can see, the element you want to scrape is now in front of you.

To get the properties of elements

The second most important use of the Elements tab is to get the properties (attributes) of an element, such as its class or id. These are what you will use to locate the element when parsing the page.

Attributes appear in the element’s opening HTML tag, and you can copy them directly from there. For example, the price element above has a class attribute; double-click the class value to select it, then copy it.

Similarly, if an element has multiple attributes, you can copy them in the same way.
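Once you have copied an element's tag name and attributes, you can combine them into a CSS selector. The helper below is only a rough sketch: the function name `build_css_selector` is made up for this example, and it assumes the attribute values contain no characters that would need escaping in CSS.

```python
def build_css_selector(tag, attributes):
    """Build a simple CSS selector from a tag name and copied attribute values.

    Hypothetical helper for illustration; it does not escape special characters.
    """
    selector = tag
    for name, value in attributes.items():
        if name == "id":
            selector += "#" + value
        elif name == "class":
            # A class attribute may hold several space-separated class names.
            selector += "".join("." + cls for cls in value.split())
        else:
            # Any other attribute becomes an attribute selector.
            selector += '[{}="{}"]'.format(name, value)
    return selector


# The class of the price element used in this tutorial.
print(build_css_selector("span", {"class": "a-price-whole"}))
```

The resulting string is the kind of selector you can then test in the Console tab before using it in a scraper.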

2. Console Tab

Trust me, mastering the console tab for web scraping will make your scraping job a whole lot easier!

For Testing CSS Selectors

Most of the time, web scrapers spend a significant amount of their time on element selection. This process can be quite time-consuming because you often don’t get it right on your first attempt. You start the scraper, realize it’s not accessing the desired element, and then you have to tweak the selector. This trial-and-error approach not only consumes a lot of time but can also be frustrating.

You can utilize the Console tab to address this issue. Here’s how:

You can run JavaScript in the Console tab to check whether your selector grabs the right elements. Don’t worry if you’re not a JavaScript expert; a line or two is all you need.

For example:

Consider the element you want to retrieve. This is the price element you saw in the picture above.

```html
<span class="a-price-whole">129<span class="a-price-decimal">.</span></span>
```

Before writing your scraper code, test your selector.

Suppose the selector you came up with is “span.a-price-whole”.

Open the Console tab of DevTools on the same page and test the selector with this line of JavaScript:

```javascript
document.querySelector("span.a-price-whole")
```

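If your selector is correct, you will see the matching element. Otherwise, the call returns null, which means the selector does not match anything on the page and you need to adjust it. Note that querySelector returns only the first match; use document.querySelectorAll to see every element the selector matches.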
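Once the selector works in the Console, the same class name can be used in your scraper. Here is a minimal sketch that pulls the whole-number part of the price out of the HTML snippet shown earlier, using only Python's standard library. The class `ClassTextExtractor` and the function `extract_price_whole` are names invented for this example; in a real project you would more likely use a parsing library that accepts CSS selectors directly.

```python
from html.parser import HTMLParser


class ClassTextExtractor(HTMLParser):
    """Collect the direct text of the first <span> that carries a given class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.depth = 0      # 0 = outside the matched span, 1 = directly inside it
        self.done = False   # set once the matched span has been closed
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if self.done:
            return
        if self.depth:
            # A nested tag inside the matched span.
            self.depth += 1
        elif tag == "span":
            classes = (dict(attrs).get("class") or "").split()
            if self.target_class in classes:
                self.depth = 1

    def handle_endtag(self, tag):
        if self.depth:
            self.depth -= 1
            if self.depth == 0:
                self.done = True

    def handle_data(self, data):
        # Keep only text that sits directly inside the matched span.
        if self.depth == 1:
            self.chunks.append(data)


def extract_price_whole(html):
    parser = ClassTextExtractor("a-price-whole")
    parser.feed(html)
    return "".join(parser.chunks).strip() or None


snippet = '<span class="a-price-whole">129<span class="a-price-decimal">.</span></span>'
print(extract_price_whole(snippet))
```

This sketch assumes well-formed markup with explicit closing tags inside the matched element; it is meant to show the idea, not to replace a full HTML parser.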

For Getting Data From Javascript

When you are scraping a website, the information you need is often present in JavaScript objects in the page source. You can use the ‘Console’ tab to interact with these objects and extract data from them.

Follow these steps to do this:

- Locating Data in JavaScript Objects:

  - Open Page Source: Begin by opening the page source. Use the shortcut ‘Ctrl + U’ (or ‘Cmd + Option + U’ on Mac), or right-click on the page and choose ‘View page source’ from the menu.

  - Search for Data Terms: Use the ‘Ctrl + F’ (or ‘Cmd + F’ on Mac) keyboard shortcut in the page source view, or in the ‘Elements’ panel, to search for specific data terms you expect to find in JavaScript objects. These terms might include product IDs, prices, or any other relevant data.

- Accessing Data from JavaScript Objects:

  - Identify Objects and Keys: Once you’ve located a relevant data term within the page source, identify the JavaScript object(s) that contain this data. JavaScript objects are collections of key-value pairs, with each key naming a property and mapping to its value.

  - Write JavaScript Code: In the ‘Console’ tab, you can write JavaScript code to access and fetch the desired data. For example, if the data you need is stored within an object named ‘productData,’ and you’re looking for the product price, you can write JavaScript code like this:

```javascript
// Access the product price from the 'productData' object.
// 'productData' is a placeholder for an object the page already defines;
// the actual name and structure may vary, so do not redeclare it here.
var productPrice = productData.price;
console.log(productPrice);
```

This prints the product price from the object.

Next, use this JavaScript in your scraper. In Selenium, you can run JavaScript code with the ‘execute_script’ method.

```python
from selenium import webdriver

# Initialize the WebDriver (you may need to specify the path to your webdriver)
driver = webdriver.Chrome()

try:
    # Navigate to the web page
    driver.get('https://example.com')  # Replace with the URL of the web page you want to scrape

    # JavaScript to run in the page. 'productData' is a placeholder for an object
    # that the page itself defines; the actual name and structure may vary.
    # The typeof check returns null instead of failing if the object is missing.
    js_code = """
    if (typeof productData === "undefined") { return null; }
    return productData.price;
    """

    # Execute the JavaScript code and retrieve the result
    product_price = driver.execute_script(js_code)

    # Print the extracted data
    print("Product Price:", product_price)
finally:
    # Close the browser even if something above fails
    driver.quit()
```

Note: If you intend to retrieve a value when using JavaScript code in Selenium, remember to include the return statement in your code.
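If the object is written directly into the page source as a literal, you may not need a browser at all. The sketch below searches the raw HTML for an assignment to a variable and decodes the value that follows. It rests on some assumptions: the variable name `productData` and the sample page are invented for illustration, and the approach only works when the object literal happens to be valid JSON (double-quoted keys, no functions or trailing commas).

```python
import json
import re

# Made-up page source for illustration only.
SAMPLE_PAGE_SOURCE = """
<html><body>
<script>
var productData = {"id": "SAMPLE-1", "price": 129.99, "title": "Sample keyboard"};
</script>
</body></html>
"""


def extract_js_object(page_source, variable_name):
    """Return the JSON-compatible value assigned to variable_name, or None."""
    pattern = re.compile(r"\b" + re.escape(variable_name) + r"\s*=\s*")
    match = pattern.search(page_source)
    if match is None:
        return None
    try:
        # raw_decode parses one JSON value starting at the given index
        # and ignores whatever text follows it (such as the trailing ';').
        value, _end = json.JSONDecoder().raw_decode(page_source, match.end())
    except json.JSONDecodeError:
        return None
    return value


data = extract_js_object(SAMPLE_PAGE_SOURCE, "productData")
print(data["price"] if data else None)
```

When the object is built dynamically or is not valid JSON, running the page in a browser as shown with Selenium remains the more reliable route.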

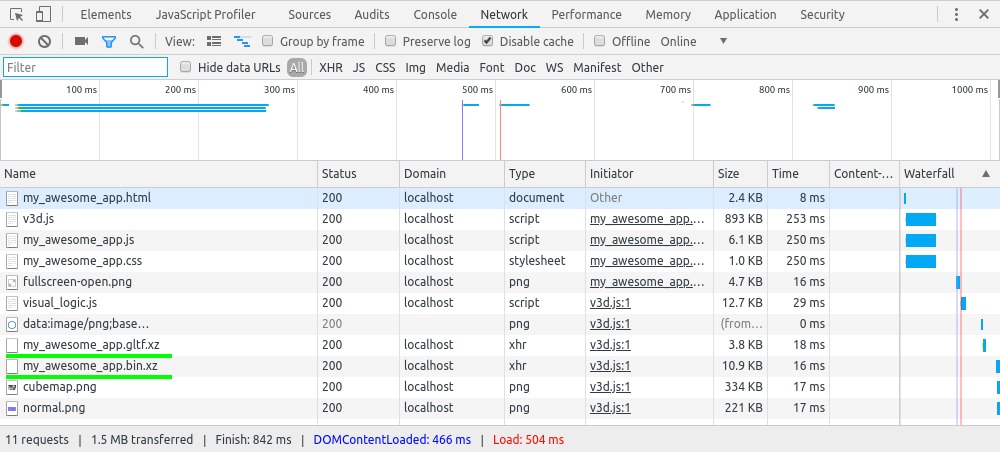

3. Network Tab

The “Network” tab in a web browser’s developer tools is a panel that provides detailed information about network activity related to a web page. It allows web developers to monitor and analyze HTTP requests and responses, which include data transfer, resource loading, API calls, and more.

To check hidden APIs

The Network tab is mostly used to find hidden APIs that a website uses to communicate with its server.

If we find a hidden API, we can scrape data directly from it instead of parsing HTML.

I have already covered how to scrape data from APIs using “requests” in the first tutorial.

How to check hidden APIs?

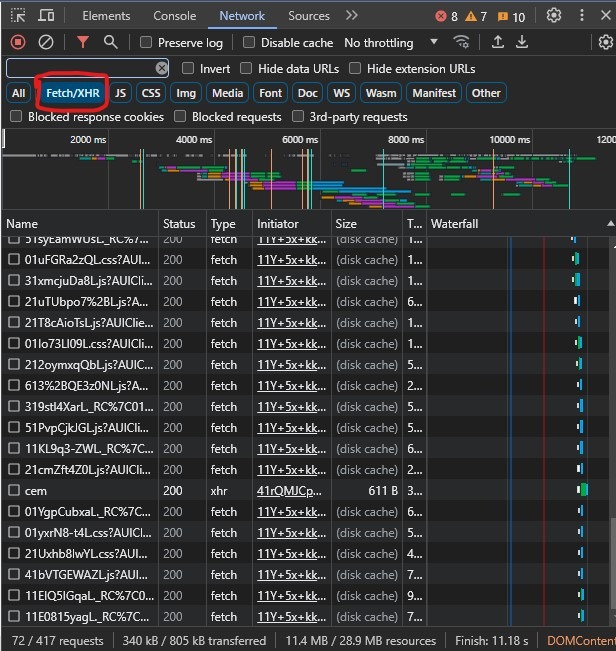

First, open the Network tab in DevTools and refresh the page.

You will now see all the HTTP requests that the page generates.

API requests are categorized as XHR (XMLHttpRequest) or Fetch requests. You can view only these specific types of requests by clicking on the Fetch/XHR button.

API requests commonly exchange data in JSON format. You have to check each request to identify the specific request whose response contains the JSON data you need. Once you’ve identified the right request, open it to access the API’s endpoint. With the endpoint, you can then proceed to scrape the data directly from the API instead of parsing the HTML.
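Once you have the endpoint, calling it from Python could look something like the sketch below. It uses only the standard library so that it runs without extra packages; the “requests” library would work just as well. The endpoint `https://example.com/api/products`, the `page` parameter, and the function names are placeholders, not a real API.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_api_request(endpoint, params=None, headers=None):
    """Build a GET request for an endpoint copied from the Network tab."""
    url = endpoint
    if params:
        # Assumes the endpoint has no query string of its own yet.
        url += "?" + urlencode(params)
    request_headers = {"Accept": "application/json"}
    request_headers.update(headers or {})
    return Request(url, headers=request_headers)


def fetch_json(request, timeout=10):
    """Send the request and decode the JSON body of the response."""
    with urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder endpoint and query parameter; replace with what DevTools shows.
request = build_api_request("https://example.com/api/products", params={"page": 1})
print(request.full_url)

# data = fetch_json(request)  # uncomment once you point this at a real endpoint
```

Building the request separately from sending it makes it easy to compare the URL and headers with what the Network tab shows before you fetch anything.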

Here are some important considerations when working with hidden APIs.

- Sometimes, you may find a hidden API that requires authentication for data access. In such cases, you cannot retrieve the data without proper authentication. Authentication is typically achieved through tokens stored in your browser’s cookies or local storage. However, not every hidden API requires authentication.

- The hidden APIs you need are not present on every website.
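When an API does require authentication, one approach is to copy the token or cookie values from the request headers shown in the Network tab and send them with your own request. The sketch below only builds the headers. How a site expects its token to be sent varies, so the `Bearer` scheme, the cookie name, and the function name `build_auth_headers` are assumptions made for illustration.

```python
def build_auth_headers(bearer_token=None, cookies=None):
    """Build request headers from values copied out of DevTools.

    Hypothetical helper: the 'Bearer' scheme is an assumption, so check the
    real request headers in the Network tab for the format the site uses.
    """
    headers = {"Accept": "application/json"}
    if bearer_token:
        headers["Authorization"] = "Bearer " + bearer_token
    if cookies:
        # Cookies are sent as a single header of name=value pairs.
        headers["Cookie"] = "; ".join(
            "{}={}".format(name, value) for name, value in cookies.items()
        )
    return headers


# Placeholder values; paste the ones from your own browser session.
headers = build_auth_headers(
    bearer_token="PASTE_TOKEN_HERE",
    cookies={"session-id": "PASTE_VALUE_HERE"},
)
print(headers)
```

Tokens and session cookies usually expire, so values copied this way tend to work only for a limited time.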